Reviving the Craft: Rediscovering the Art and Science of High-Performance Coding

In my previous post, I examined the implications of technical debt and the challenges associated with not addressing it promptly and transparently. One prevalent form of technical debt is code plagued by performance issues.

The skills to develop high-performance code and identify patterns that adversely affect performance are fading in our profession.

Several factors contribute to the decline of this skill, one of which is the increasing prevalence of high-level managed languages such as C#. These languages simplify development by abstracting away performance considerations, which leads developers to overlook the performance impact of their code. Consequently, developers who work primarily or exclusively with these languages often lack awareness of the more efficient techniques available and of the performance ramifications of commonly used but poor patterns.

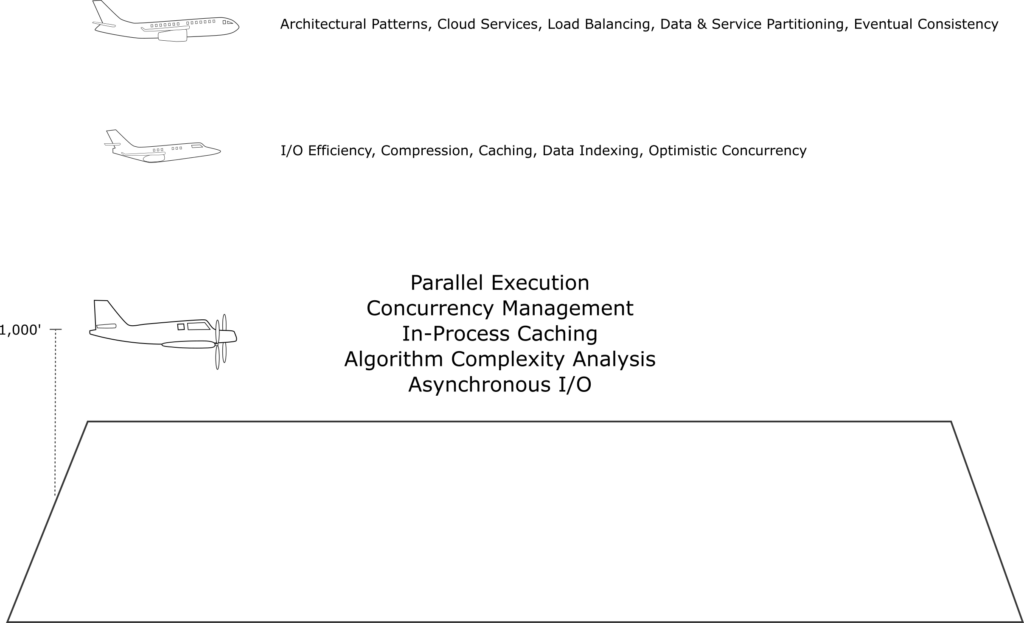

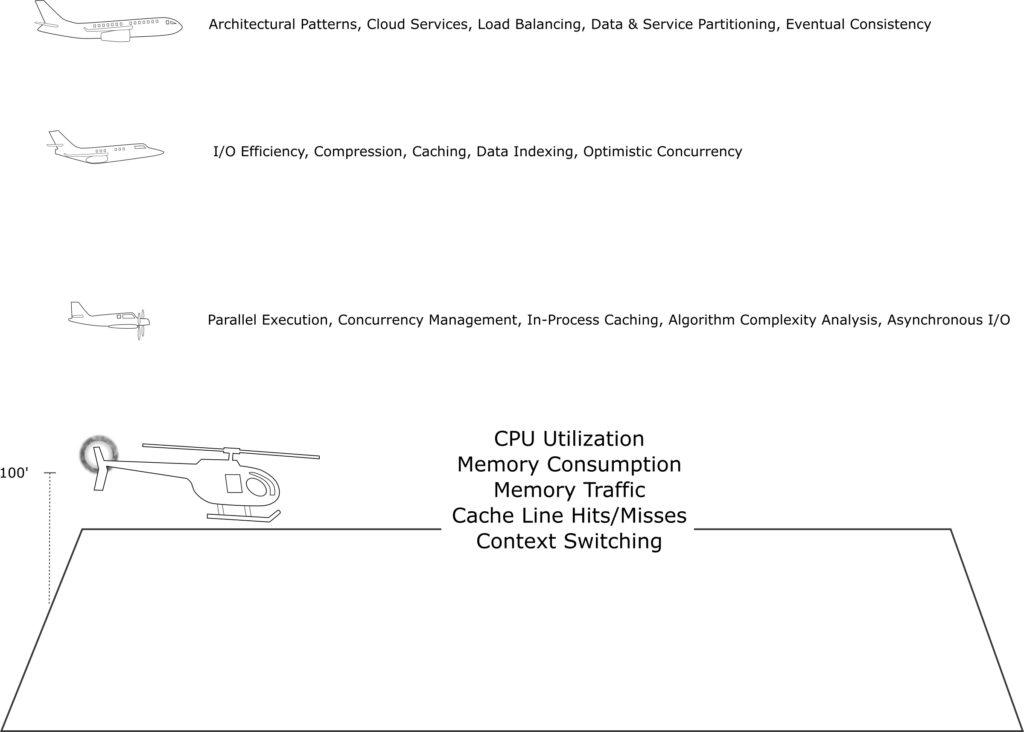

In software engineering, performance optimization can be likened to viewing a landscape from varying heights. Each perspective offers unique insights and strategies for improving efficiency and functionality. In this post, I explore these viewpoints, ranging from the broad 40,000-foot view to the fine-grained 100-foot view, and examine how each contributes to managing software performance effectively. I also highlight the importance of redeveloping the skill of writing high-performance code and managing compute resources at even the lowest levels.

Expecting More

As software engineers, we rely on various runtimes, compilers, build pipeline tools, library dependencies, operating systems, hardware, and cloud services, expecting optimal performance from all these components. While we demand continuous improvements from those providing these foundational services, we often fail to hold ourselves to the same high standards. This is particularly evident in vertical line-of-business engineering teams. Our customers deserve the same level of excellence that we demand from others, and it begins with expecting more from ourselves.

This self-expectation is crucial as it sets the foundation for performance improvement at every level. By raising our standards, we elevate the quality of our work and contribute to a more reliable and efficient software ecosystem.

To achieve this, we must adopt a comprehensive approach encompassing various perspectives on performance management, ensuring that every aspect is meticulously considered and refined, from broad architectural decisions to the finest CPU and resource optimizations.

Performance and Scalability: Interconnected Yet Distinct

Performance and scalability are two fundamental aspects of software engineering that, although closely related, possess distinct characteristics that require different management techniques. Performance refers to how efficiently a system or application executes its tasks, often measured by response times, throughput, and resource utilization. Scalability, on the other hand, refers to a system’s ability to handle increased load or expansion, measured by its capacity to maintain performance levels as demand grows.

Understanding the interplay between performance and scalability is crucial for optimizing software systems. Enhancing performance typically involves refining algorithms, improving code efficiency, and optimizing resource utilization. These improvements contribute to better execution speed and responsiveness, directly benefiting scalability by enabling the system to handle more concurrent tasks without degradation.

Conversely, scalability techniques such as load balancing, distributed computing, and horizontal scaling bolster overall performance under load. By distributing the workload across multiple resources, these methods prevent bottlenecks and maintain consistent performance under varying loads. This symbiotic relationship means that focusing on one aspect often benefits the other: a more performant system tends to scale better, and scalable solutions help preserve system-wide performance as demand grows.

However, the nuanced differences between these two concepts necessitate distinct approaches. Optimizing performance often requires a fine-grained, detail-oriented process with thorough analysis and targeted enhancements. While scalability benefits from performance improvements, it demands broader architectural strategies that accommodate growth and variability. In summary, while performance and scalability influence one another, each requires specialized techniques to address its unique challenges. By recognizing their interconnectedness, software engineers can devise comprehensive strategies that optimize both, producing robust and efficient systems that meet evolving demands.

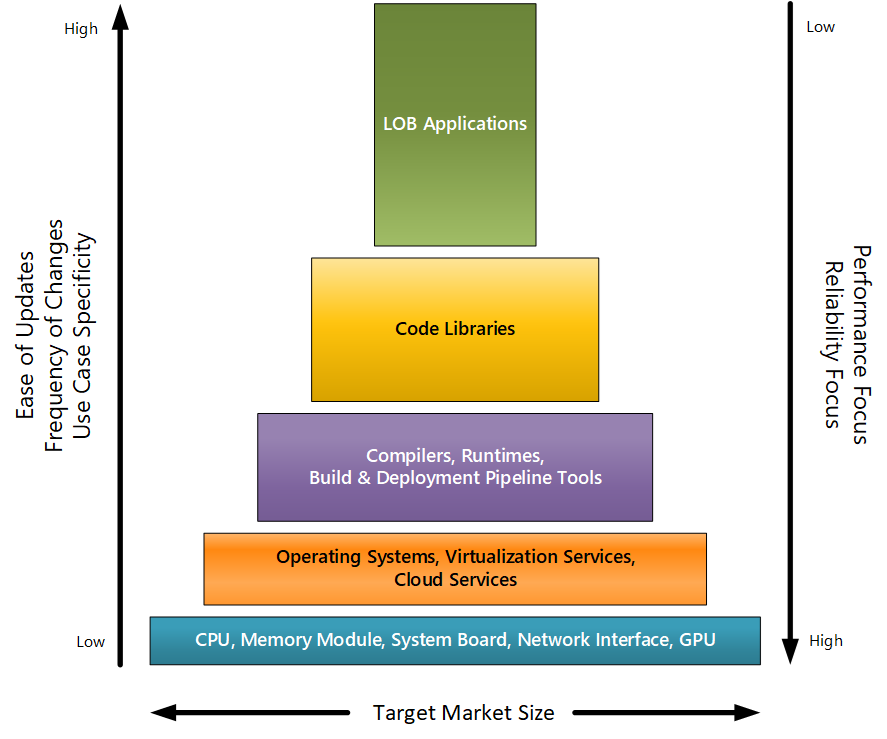

Target Market Correlation to Performance Focus

The size and diversity of a customer base significantly influence a system’s reusability and dynamism. At the hardware and firmware level, the target market is notably horizontal: CPUs, memory modules, and system boards are designed to run various operating systems across countless industries, ensuring broad compatibility and relatively static functionality.

Operating systems and virtualization services are intermediaries between hardware components and applications. They are designed to be versatile and compatible with a wide range of hardware platforms, supporting various industries and use cases. Operating systems provide the necessary environment for applications to run efficiently while managing resources such as memory, CPU, storage, and I/O operations.

In addition to these robust operating systems and virtualization services, cloud services offer more advanced functionalities, enabling even greater scalability and flexibility in addressing industry-specific demands. As cloud services further augment and extend the capabilities of the underlying operating systems and virtualization layers, the differentiation between various services begins to blur.

Development tools, languages, and compilers further highlight this trend. These tools are crafted to serve a broad customer base, enabling the development of diverse applications. While some programming languages are tailored to specific industries or use cases, the overarching goal is to provide versatile and reusable tools that can be applied across multiple domains.

Reusable library authors are positioned in the middle ground, targeting both vertical and horizontal use cases. Libraries are designed to function within specific runtimes and ecosystems, yet they provide standard functionalities applicable to various applications. This balance ensures that libraries remain adaptable while catering to many frequent use cases.

On the other hand, line-of-business (LOB) solutions are the epitome of a vertical focus. These software solutions address specific use cases and problems, demanding highly dynamic and fluid functionalities. Their ever-evolving nature requires constant adaptation and refinement, which benefits from the vertical nature of the development environment.

LOB Performance

Although it is reasonable to prioritize dynamism over fine-grained performance in highly dynamic code, the industry has become increasingly complacent about performance optimization. It has shifted dramatically away from performance and now focuses too heavily on rapid feature rollout. This shift is costly and requires immediate attention to restore the balance between adaptability and efficiency.

The demand from customers and businesses for new features and rapid market delivery has led engineering teams to overlook simple yet often highly effective design and implementation techniques that enhance performance. This creates a paradox: the same customers and businesses that push for speed of delivery also expect high system performance and cost efficiency. Delivering features and fixing bugs as quickly as possible while ignoring simple performance techniques results in suboptimal system performance and dissatisfaction among all stakeholders, and it fosters a pervasive tolerance of mediocrity.

Specific performance targets are rarely stated as requirements in line-of-business applications. Engineering teams must therefore take responsibility for prioritizing this non-functional requirement; otherwise, poor performance becomes a burdensome technical debt that grows over time.

Multiple layers of system performance and scalability must be considered to ensure the development of a comprehensive system that is both scalable and high-performance. This includes examining high-level architectural perspectives as well as detailed implementation aspects.

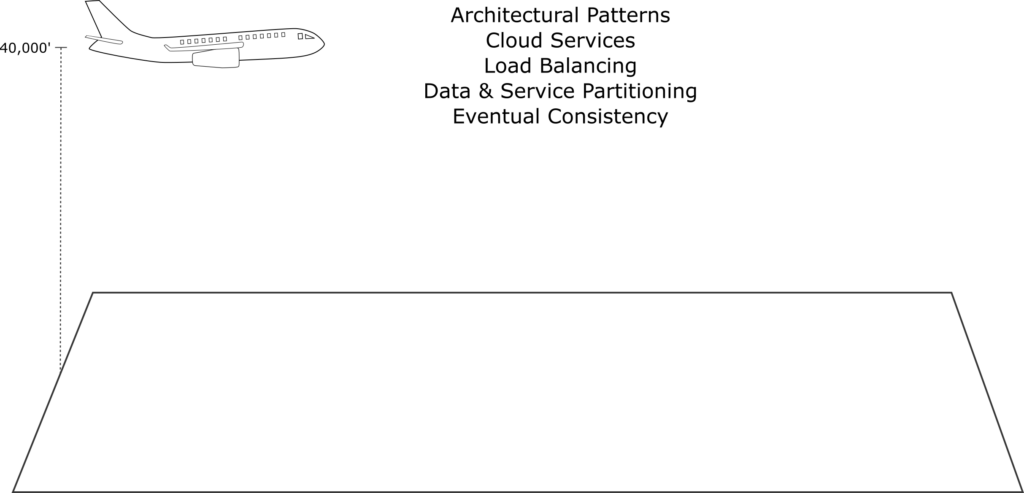

The 40,000-Foot View: Cloud Services, Architectural Patterns, and Deployment Environments

From the highest vantage point, performance management involves leveraging third-party products and cloud services to distribute and balance loads effectively. This includes breaking up data, services, and traffic into distinct partitions to ensure scalability and reliability. Architectural patterns such as eventual consistency and asynchronous operations enable systems to handle large volumes of data and user requests without sacrificing responsiveness; eventual consistency achieves this by tolerating brief periods in which replicas disagree.

In a very high-level approach to performance and scalability, several key aspects are reviewed:

Architectural and High-Level Design Patterns

Architectural patterns such as microservices, serverless architecture, and containerization are integral to achieving performance and scalability. These patterns help decouple components, thus making the system more resilient and straightforward to scale. High-level design patterns, such as CQRS (Command Query Responsibility Segregation) and Event Sourcing, further enhance the system’s ability to manage complex data flows and ensure data integrity.
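To make Event Sourcing and CQRS more concrete, here is a minimal sketch in Python (used for brevity in these examples, since the post itself contains no code; the same shapes translate directly to C#). The account-balance domain, the event kinds, and all names are hypothetical. State is never stored directly; it is derived by replaying an append-only log, while a separate read model serves queries.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class Event:
    kind: str    # hypothetical event types: "deposited" or "withdrawn"
    amount: int


def apply_event(balance: int, event: Event) -> int:
    """Apply one event to the current state; the event log is the source of truth."""
    if event.kind == "deposited":
        return balance + event.amount
    if event.kind == "withdrawn":
        return balance - event.amount
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events) -> int:
    """Event Sourcing: rebuild current state by folding over the append-only log."""
    return reduce(apply_event, events, 0)


class BalanceReadModel:
    """CQRS query side: a projection updated as each event is appended, so reads
    return a precomputed value instead of replaying the whole log."""

    def __init__(self) -> None:
        self.balance = 0

    def handle(self, event: Event) -> None:
        self.balance = apply_event(self.balance, event)


log = [Event("deposited", 100), Event("withdrawn", 30), Event("deposited", 5)]
read_model = BalanceReadModel()
for e in log:  # the command side appends events; the read model consumes them
    read_model.handle(e)

print(replay(log), read_model.balance)  # 75 75
```

Separating the write path (appending events) from the read path (querying a projection) lets each side be stored, scaled, and optimized independently, which is the main performance motivation for CQRS.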

Typical Cloud Services Utilized

Cloud services such as AWS, Azure, and Google Cloud provide a robust foundation for scalable applications. These services offer tools and platforms, including managed databases, serverless functions, messaging systems, and container orchestration services. They also provide advanced monitoring and analytics tools to track performance and optimize resource usage.

Load Balancing

Effective load balancing distributes user requests across nodes and geographies so that no single server becomes a bottleneck. Common strategies include routing traffic to the closest or least-loaded server, or simply rotating through servers in round-robin order; either way, the result is lower latency and a better user experience.
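A minimal sketch of two of the strategies named above, round-robin and least-loaded selection, under the simplifying assumption that the balancer tracks in-flight requests itself; production load balancers also weigh health checks, proximity, and capacity. The class names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Cycles through servers in a fixed order, ignoring their current load."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastLoadedBalancer:
    """Sends each request to the server with the fewest in-flight requests."""

    def __init__(self, servers):
        self.in_flight = {s: 0 for s in servers}

    def acquire(self):
        # min() returns the first minimal key, so ties go to the earliest server
        server = min(self.in_flight, key=self.in_flight.get)
        self.in_flight[server] += 1
        return server

    def release(self, server):
        self.in_flight[server] -= 1


rr = RoundRobinBalancer(["a", "b", "c"])
print([rr.pick() for _ in range(5)])  # ['a', 'b', 'c', 'a', 'b']

ll = LeastLoadedBalancer(["a", "b"])
first = ll.acquire()   # 'a' (tie broken by order)
second = ll.acquire()  # 'b'
ll.release(first)
print(ll.acquire())    # 'a', since it now has no in-flight requests
```

Round-robin needs no state beyond a position in the list, which makes it cheap, but it ignores how busy each server is; least-loaded selection adapts to uneven request costs at the price of tracking load.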

Partitioning Data and Services

Partitioning involves dividing a dataset or service into smaller, manageable pieces. This is achieved either horizontally (sharding), where each partition holds a subset of the records, or vertically, where each partition holds a subset of the fields (columns) of each record. Partitioning is crucial for large-scale applications, as it allows parallel processing and reduces the load on individual servers and services.
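A sketch of both partitioning styles, assuming string keys and a fixed shard count; `shard_for` and `split_vertically` are illustrative names, not a library API. Horizontal partitioning routes each record by a stable hash of its key; vertical partitioning splits one record's fields into a frequently read part and a rarely read part.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Horizontal partitioning: route a record to a shard by a stable hash of its key.
    (Python's built-in hash() of str is randomized per process, so it is not used.)"""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def split_vertically(record: dict, key_field: str, hot_fields: set):
    """Vertical partitioning: frequently read fields go to one partition and the rest
    to another; both halves keep the key so the record can be reassembled."""
    hot = {k: v for k, v in record.items() if k == key_field or k in hot_fields}
    cold = {k: v for k, v in record.items() if k == key_field or k not in hot_fields}
    return hot, cold


shards = {i: [] for i in range(4)}
for customer_id in ["c-1001", "c-1002", "c-1003", "c-1004", "c-1005"]:
    shards[shard_for(customer_id, 4)].append(customer_id)

hot, cold = split_vertically({"id": 7, "name": "Ada", "bio": "long text"}, "id", {"name"})
print(hot)   # {'id': 7, 'name': 'Ada'}
print(cold)  # {'id': 7, 'bio': 'long text'}
```

Plain modulo hashing moves most keys to a different shard when the shard count changes; schemes such as consistent hashing reduce that reshuffling, which matters when shards are added under load.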

Utilizing Eventual Consistency Models

Eventual consistency models guarantee that, once updates stop arriving, all replicas of a dataset will eventually converge to the same value, even though updates are applied at different nodes at different times. This model is advantageous in distributed systems where immediate consistency is impractical because of scalability and responsiveness requirements. Conflict resolution and versioning are used to reconcile concurrent updates and keep the data reliable.
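One common conflict-resolution strategy is last-writer-wins, sketched below under the assumption that each write carries a logical version number and a replica identifier used as a tie-breaker (both hypothetical fields). Because the merge function picks the same winner regardless of the order in which updates arrive, the replicas converge.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    value: str
    version: int     # hypothetical logical clock, incremented on each write
    replica_id: str  # hypothetical tie-breaker for concurrent writes


def merge(a: Versioned, b: Versioned) -> Versioned:
    """Last-writer-wins: keep the write with the higher (version, replica_id).
    As long as those pairs are unique, the winner does not depend on arrival order."""
    return a if (a.version, a.replica_id) >= (b.version, b.replica_id) else b


class Replica:
    def __init__(self, initial: Versioned) -> None:
        self.state = initial

    def receive(self, update: Versioned) -> None:
        self.state = merge(self.state, update)


initial = Versioned("v0", 0, "")
east, west = Replica(initial), Replica(initial)
u1 = Versioned("from-east", 1, "east")
u2 = Versioned("from-west", 1, "west")

# The replicas receive the two concurrent updates in opposite orders...
east.receive(u1)
east.receive(u2)
west.receive(u2)
west.receive(u1)

# ...yet both settle on the same value.
print(east.state.value, west.state.value)  # from-west from-west
```

Last-writer-wins is simple but silently discards one of two concurrent writes; systems that cannot afford that use richer techniques such as vector clocks or CRDTs.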

By integrating these elements, this high-level approach produces systems that are robust, adaptable, and able to handle growth and complexity with ease.

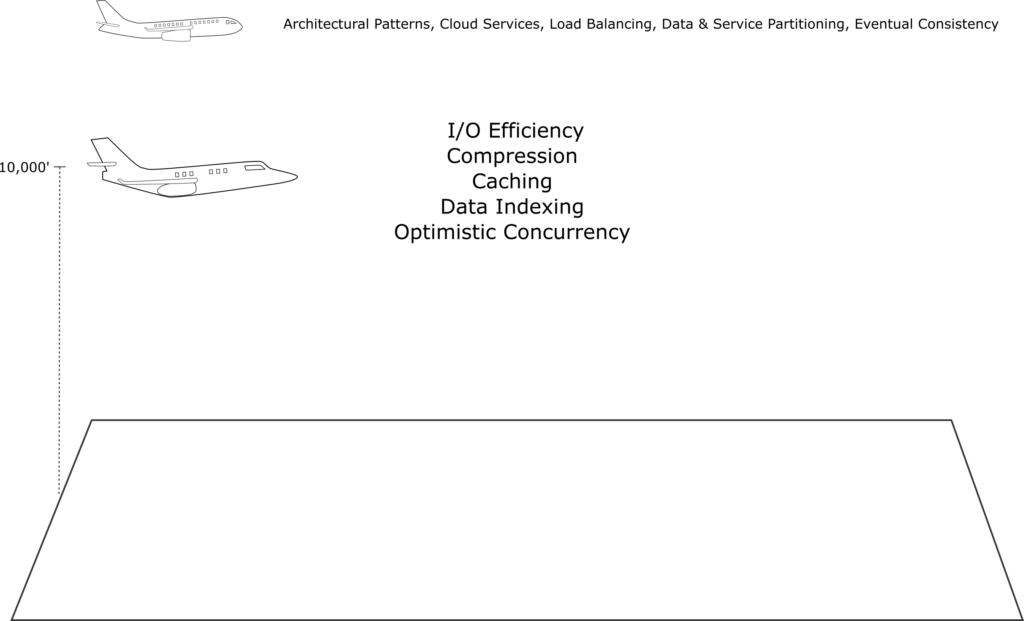

The 10,000-Foot View: Optimizing I/O and Data Access

As we descend to a closer view, the focus shifts to individual I/O (Input/Output) and data access operations. Engineers scrutinize how data is read, written, and processed at this level, aiming to optimize these operations for better performance.

Utilizing indexes is fundamental to accelerating data retrieval. They allow databases to locate and access rows quickly without scanning entire tables or partitions. Indexes significantly improve query performance, reducing the time required to fetch data.
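To illustrate why an index avoids a full scan, the sketch below compares a linear scan with a hash index built over one column. It is a toy model that assumes in-memory rows with made-up data; real database indexes are typically B-trees, which also support range queries and ordered scans.

```python
orders = [
    {"id": 1, "customer": "alice", "total": 30},
    {"id": 2, "customer": "bob", "total": 12},
    {"id": 3, "customer": "alice", "total": 7},
]


def find_by_customer_scan(rows, customer):
    """Full scan: examines every row on every lookup, O(n)."""
    return [r for r in rows if r["customer"] == customer]


def build_index(rows, column):
    """Hash index on one column: value -> matching rows, built once in O(n).
    A real index must also be updated on every insert or update, which is the
    write-side cost of indexing."""
    index = {}
    for r in rows:
        index.setdefault(r[column], []).append(r)
    return index


by_customer = build_index(orders, "customer")


def find_by_customer_indexed(customer):
    """Average O(1) hash probe, plus the size of the result."""
    return by_customer.get(customer, [])


print([r["id"] for r in find_by_customer_indexed("alice")])  # [1, 3]
```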

Another crucial technique is optimistic concurrency control, which lets multiple transactions proceed without acquiring locks, improving responsiveness. It assumes that conflicts are rare: rather than preventing them up front, each transaction validates at commit time that no other transaction has modified the data it read, and retries or aborts if one has.
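A minimal sketch of optimistic concurrency, assuming a toy in-memory store in which every record carries a version number. The short lock only models the atomic conditional update a database performs when it commits (for example, an `UPDATE` whose `WHERE` clause includes the expected version); readers never hold it. `VersionedStore` and `increment_with_retry` are hypothetical names.

```python
import threading


class ConflictError(Exception):
    """Raised when a commit finds that another writer got there first."""


class VersionedStore:
    """Toy key-value store in which each record carries a version number."""

    def __init__(self) -> None:
        self._data = {}  # key -> (value, version)
        self._commit_lock = threading.Lock()

    def read(self, key):
        """Readers take no lock; they just note the version they saw."""
        return self._data.get(key, (None, 0))

    def commit(self, key, new_value, expected_version):
        """Validate-then-write, performed atomically (like UPDATE ... WHERE version = ?)."""
        with self._commit_lock:
            _, current = self._data.get(key, (None, 0))
            if current != expected_version:
                raise ConflictError(f"{key}: expected v{expected_version}, found v{current}")
            self._data[key] = (new_value, current + 1)


def increment_with_retry(store, key, attempts=5):
    """Optimistic read-modify-write: on conflict, re-read and try again."""
    for _ in range(attempts):
        value, version = store.read(key)
        try:
            store.commit(key, (value or 0) + 1, version)
            return
        except ConflictError:
            continue
    raise ConflictError(f"{key}: gave up after {attempts} attempts")


store = VersionedStore()
_, seen_version = store.read("counter")      # version 0
store.commit("counter", 10, seen_version)    # succeeds; record is now version 1
try:
    store.commit("counter", 99, seen_version)  # stale version: rejected
except ConflictError as err:
    print("conflict:", err)                  # conflict: counter: expected v0, found v1
increment_with_retry(store, "counter")
print(store.read("counter"))                 # (11, 2)
```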

Distributed caches reduce the need to repeatedly fetch data from long-term storage. Effective caching strategies can dramatically boost throughput and lower latency.
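The cache-aside pattern is one common caching strategy; the sketch below shows its read path with a time-to-live. A plain dictionary stands in for the distributed cache, and a trivial loader function stands in for long-term storage, so the class is illustrative rather than a client for any particular cache product.

```python
import time


class CacheAside:
    """Cache-aside read path: try the cache, fall back to the slow store on a miss,
    then populate the cache entry with a time-to-live (TTL)."""

    def __init__(self, load_from_store, ttl_seconds, clock=time.monotonic):
        self._load = load_from_store
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, expires_at); stand-in for a distributed cache
        self.misses = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]              # hit: no trip to long-term storage
        self.misses += 1
        value = self._load(key)          # miss or expired: go to the store
        self._entries[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key):
        """Call after writing to the store so readers do not see stale data."""
        self._entries.pop(key, None)


fake_now = [0.0]  # controllable clock so the example is deterministic
cache = CacheAside(lambda key: key.upper(), ttl_seconds=30, clock=lambda: fake_now[0])
cache.get("sku-1")
cache.get("sku-1")
print(cache.misses)  # 1: the second read was served from the cache
fake_now[0] = 31.0
cache.get("sku-1")
print(cache.misses)  # 2: the entry expired, so the store was read again
```

The TTL bounds how stale a cached value can become, and explicit invalidation after writes narrows that window further.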

Compression techniques minimize the data transfer size, enabling faster I/O operations and reducing storage requirements. By compressing data, systems handle higher volumes of transactions efficiently. Furthermore, it is vital to minimize the frequency of network calls and the size of payloads associated with I/O operations. Reducing the number of calls lowers overhead, while smaller payloads ensure quicker data transfer, enhancing overall application performance.
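The effect of batching and compression on a payload can be shown with Python's standard `json` and `zlib` modules. The 500 inventory-style records are made-up sample data; exact byte counts depend on the data and compression level, so none are claimed here.

```python
import json
import zlib

records = [{"sku": f"item-{i}", "status": "in_stock", "warehouse": "east"} for i in range(500)]

# One call per record: 500 round trips, each paying per-call overhead.
individual_payloads = [json.dumps(r).encode("utf-8") for r in records]

# Batching: a single payload for all records, so a single round trip.
batched = json.dumps(records).encode("utf-8")

# Compression: repetitive text such as JSON usually shrinks substantially.
compressed = zlib.compress(batched, 6)

print(len(individual_payloads), "calls vs 1 call")
print(len(batched), "bytes raw ->", len(compressed), "bytes compressed")

# The receiver reverses the steps and gets the original records back.
assert json.loads(zlib.decompress(compressed)) == records
```

Compression trades CPU time for fewer bytes on the wire, so it pays off mainly for large or repetitive payloads; very small messages may not shrink at all.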

The 1,000-Foot View: Algorithm Efficiency

Closer still, at the 1,000-foot view, we delve into the intricacies of application algorithms. Here, time and space complexities are analyzed to ensure that algorithms run efficiently. Parallel execution flows and concurrency management are essential in this context, ensuring that threads are coordinated to prevent conflicts and inefficiencies.
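A classic illustration of time and space trade-offs is duplicate detection. The two hypothetical functions below return the same answer, but the first compares every pair while the second spends extra memory on a hash set to make a single pass.

```python
def has_duplicates_quadratic(items):
    """O(n^2) time, O(1) extra space: compares every pair of elements."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    """O(n) average time, O(n) extra space: trades memory for speed with a set."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

For 10,000 items, the pairwise version performs up to n(n-1)/2 = 49,995,000 comparisons in the worst case, while the set-based version performs about 10,000 set lookups and insertions.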

When multiple threads access shared resources, techniques such as locks, semaphores, and atomic operations are implemented to handle race conditions and maintain data integrity. Local in-process/in-memory caching further optimizes performance by storing frequently accessed data in memory, reducing the need for repeated data retrieval from slower storage media or even distributed caches.
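The race condition described above can be seen in a shared counter: `value += 1` is a read-modify-write, so two threads can read the same old value and one increment is lost. This Python sketch guards the update with a lock; the class and helper names are hypothetical.

```python
import threading


class Counter:
    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self) -> None:
        # Read-modify-write: two threads can read the same value and lose an update.
        self.value += 1

    def increment(self) -> None:
        # The lock turns the read-modify-write into a single critical section.
        with self._lock:
            self.value += 1


def hammer(counter, method_name, threads=8, per_thread=10_000):
    """Call the named method from several threads at once and return the total."""
    def work():
        method = getattr(counter, method_name)
        for _ in range(per_thread):
            method()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return counter.value


print(hammer(Counter(), "increment"))  # 80000
```

Whether the unguarded method visibly loses updates on a given run depends on the interpreter and thread scheduling, which is exactly what makes such bugs hard to catch in testing; the locked version is correct regardless.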

Asynchronous I/O operations play a crucial role in enhancing system responsiveness. Because an asynchronous I/O operation does not block the calling thread, the system can execute other tasks while waiting for the operation to complete. This approach significantly improves the overall throughput and scalability of the application.
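Python's `asyncio` offers a compact way to sketch this. Here `asyncio.sleep` stands in for real network or disk I/O (an assumption that keeps the example self-contained), and the resource names are made up. The sequential version waits for each call in turn; the concurrent version starts all three and lets them wait together.

```python
import asyncio
import time


async def fetch(resource: str, delay: float = 0.2) -> str:
    # asyncio.sleep stands in for a network or disk call; while this task waits,
    # the event loop is free to run other tasks.
    await asyncio.sleep(delay)
    return f"{resource}: done"


async def sequential(resources):
    # Each call is awaited before the next one starts.
    return [await fetch(r) for r in resources]


async def concurrent(resources):
    # All calls start immediately and wait together; results keep input order.
    return await asyncio.gather(*(fetch(r) for r in resources))


for runner in (sequential, concurrent):
    start = time.perf_counter()
    results = asyncio.run(runner(["users", "orders", "inventory"]))
    print(f"{runner.__name__}: {time.perf_counter() - start:.2f}s -> {results}")
```

With three 0.2-second waits, expect the sequential runner to take roughly 0.6 seconds and the concurrent one roughly 0.2 seconds, though exact timings vary by machine.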

The 100-Foot View: CPU and Memory Efficiency

At the most detailed level, the 100-foot view, performance optimization focuses on the efficiency of CPU and memory usage. Engineers consider how to make the most of the finite computing resources available in every operation. This involves fine-tuning code to minimize CPU cycles, cache misses, memory consumption, and memory traffic, ensuring that every part of the system runs smoothly.

Once algorithms are selected, their implementations must be optimized to use the fewest resources possible. This requires careful profiling and benchmarking to identify bottlenecks and maximize code path efficiency.
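Benchmarking is most useful when it compares alternatives under the same conditions. Below is a minimal micro-benchmark using Python's `timeit`, comparing two hypothetical ways to build a string; in .NET, a dedicated harness such as BenchmarkDotNet plays the same role. No timings are shown because they depend on the machine and interpreter version.

```python
import timeit


def concat_in_loop(parts):
    result = ""
    for p in parts:
        result += p  # may create a new, longer string on each iteration
    return result


def concat_with_join(parts):
    return "".join(parts)  # sizes the result once, then copies each part once


parts = [str(i) for i in range(10_000)]
# Verify the candidates agree before comparing their cost.
assert concat_in_loop(parts) == concat_with_join(parts)

for fn in (concat_in_loop, concat_with_join):
    # Keep the best of several repeats to reduce noise from other activity.
    best = min(timeit.repeat(lambda: fn(parts), number=50, repeat=5))
    print(f"{fn.__name__}: {best / 50 * 1e6:.1f} µs per call")
```

Taking the minimum of several repeats filters out interference from other processes, and checking that both versions return the same result first guards against benchmarking an incorrect optimization.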

Engineers should minimize the overhead associated with thread context switches and synchronization by using lightweight synchronization primitives and avoiding excessive locking. Techniques such as lock-free algorithms and fine-grained locking are frequently employed to achieve this. Careful workload distribution among threads ensures optimal resource utilization, keeping CPU and memory consumption in check.
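Fine-grained locking can be sketched with lock striping: instead of one lock for an entire map, keys are hashed onto several independent locks. The `StripedCounterMap` class and its default of 16 stripes are illustrative assumptions. In standard CPython builds the global interpreter lock limits parallel speed-up, so treat this as a demonstration of the structure rather than a benchmark; the pattern pays off most in runtimes with true parallelism, such as .NET.

```python
import threading
import zlib


class StripedCounterMap:
    """Per-key counters guarded by lock striping: each key hashes to one of several
    locks, so threads updating different keys rarely contend, unlike a design with
    one global lock around the whole map."""

    def __init__(self, stripes: int = 16) -> None:
        self._locks = [threading.Lock() for _ in range(stripes)]
        self._maps = [{} for _ in range(stripes)]

    def _stripe(self, key: str) -> int:
        # crc32 is stable across runs, unlike the randomized built-in hash() of str
        return zlib.crc32(key.encode("utf-8")) % len(self._locks)

    def increment(self, key: str) -> None:
        i = self._stripe(key)
        with self._locks[i]:  # only this key's stripe is locked
            counts = self._maps[i]
            counts[key] = counts.get(key, 0) + 1

    def get(self, key: str) -> int:
        i = self._stripe(key)
        with self._locks[i]:
            return self._maps[i].get(key, 0)


counters = StripedCounterMap()


def worker(key: str) -> None:
    for _ in range(1_000):
        counters.increment(key)       # per-thread key: usually its own stripe
        counters.increment("shared")  # hot key: all threads contend on one stripe


threads = [threading.Thread(target=worker, args=(f"user-{n}",)) for n in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counters.get("shared"), counters.get("user-0"))  # 4000 1000
```

The "shared" key shows the limit of striping: a single hot key still funnels every thread through one lock, so workload distribution matters as much as lock granularity.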

Memory consumption must be optimized by using memory-efficient data structures and algorithms. Techniques such as pooling, recycling, and avoiding unnecessary allocations can significantly reduce memory usage. Engineers should also be mindful of cache locality and design data structures that make good use of CPU caches, for example by storing data that is accessed together contiguously, thereby reducing memory traffic and access latency.
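A minimal sketch of pooling and allocation avoidance in Python: a hypothetical `BufferPool` hands out reusable `bytearray` buffers, and a `memoryview` slices them without copying. This is analogous in spirit to .NET's `ArrayPool<T>` and `Span<T>`, though the sketch does not reproduce their behavior or APIs.

```python
import io


class BufferPool:
    """Hypothetical minimal pool of fixed-size bytearrays: callers rent a buffer and
    give it back, so steady-state work reuses buffers instead of allocating new ones."""

    def __init__(self, buffer_size: int, max_pooled: int = 8) -> None:
        self._size = buffer_size
        self._max = max_pooled
        self._free = []
        self.allocations = 0

    def rent(self) -> bytearray:
        if self._free:
            return self._free.pop()  # reuse: no allocation
        self.allocations += 1
        return bytearray(self._size)

    def give_back(self, buf: bytearray) -> None:
        if len(self._free) < self._max:
            self._free.append(buf)
        # otherwise drop it and let the garbage collector reclaim it


def stream_byte_sum(stream, pool: BufferPool) -> int:
    """Sum every byte of a stream using one rented buffer and no per-chunk copies."""
    buf = pool.rent()
    try:
        with memoryview(buf) as view:
            total = 0
            while True:
                n = stream.readinto(buf)  # fills the rented buffer in place
                if not n:
                    break
                total += sum(view[:n])    # zero-copy slice of just the bytes read
            return total
    finally:
        pool.give_back(buf)


pool = BufferPool(buffer_size=4)
data = bytes(range(10))  # bytes 0..9
print(stream_byte_sum(io.BytesIO(data), pool))  # 45
print(stream_byte_sum(io.BytesIO(data), pool))  # 45, using the pooled buffer again
print(pool.allocations)                         # 1
```

A rented buffer may still hold bytes from its previous user, so code should only read the portion it has just filled, as the loop above does with `view[:n]`.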

At this level, the focus is on maintaining the efficiency of each node, thereby reducing the need to scale vertically or horizontally. Ensuring that each component operates at peak efficiency minimizes the system’s overall resource requirements, resulting in cost savings and improved performance. This involves a holistic approach to resource management, scrutinizing every detail to ensure the system runs as smoothly and efficiently as possible.

The Overlooked Perspective

Different teams and companies emphasize performance optimization to varying degrees, influenced by several factors. The specific use cases or products being developed, customer requirements, and the engineering teams’ expertise often determine the level of focus on performance details. For instance, in line-of-business (LOB) applications that frequently change, it is understandable that the intensive performance scrutiny applied to operating systems or hardware is not feasible. Nonetheless, this does not justify completely disregarding the importance of managing low-level performance, as there are numerous simple and effective methods to prevent poor performance through adequate education and understanding.

As competition intensifies, the demand for higher performance from line-of-business (LOB) applications increases. However, many engineers increasingly rely on cloud services, third-party products, and architectural patterns as the sole means of enhancing performance and scalability. In terms of my view-level diagrams, detailed analysis at the 100-foot level is largely overlooked, and even the 1,000-foot level receives inadequate attention.

The timing of this shift away from lower-level performance optimizations is both ironic and unfortunate, given that our tools, languages, and runtimes have advanced significantly. These advancements offer sophisticated tools, techniques, and language features that enable us to maintain a strong focus on performance at the lowest levels with minimal effort.

The Core of Every System

The core of every complete software system is executing code, from the straightforward desktop application to the most extensive multi-cloud global software services. Regardless of how sophisticated the architecture is or how advanced the cloud services and scalable patterns used are, executing code remains the system’s heartbeat. Poorly performing code undermines even the most meticulously designed systems.

Consider a scenario where the code does not effectively manage computing resources. This inefficiency will impact vertical scaling by necessitating nodes with more substantial computing resources, thereby driving up costs and reducing the system’s overall efficiency. Furthermore, it affects horizontal scaling by requiring more frequent scale-outs to handle the same workload, increasing operational expenses. These inefficiencies consume financial resources rapidly, rendering the advantages of the best architecture and latest cloud services moot.

Effective code execution ensures optimal resource utilization, which is crucial for maintaining both performance and cost efficiency. Every operation, function, and process must be thoroughly scrutinized and optimized to prevent resource wastage and ensure the system operates as intended. Through careful management of computing resources, the integrity and efficiency of the software system are preserved, allowing it to scale and perform in a manner that meets the demands of its users.

Lower the View and Raise the Bar

When I began working with the .NET Framework 1.1 in 2004, the performance was dismal. The memory footprint for a simple “Hello World” application was surprisingly large. At that time, the primary advantage over C++ was related to integrated memory management and an object reference model, eliminating the need for pointer use or manipulation. This advancement came with the assurance of preventing memory leaks and bad pointer access violations. Furthermore, the framework encompassed a valuable base class library, and fundamental concepts such as enumerables and other common constructs were integral components. I was also surprised by the popularity of “XCOPY deployment,” but this was nonetheless a significant selling point for many at the time.

During this period, mainstream multi-threaded applications were relatively novel, and few developers had experience with concurrency management, such as semaphores and critical section objects in C and C++ applications. From its inception, the .NET Framework incorporated multi-threading concepts, addressed concurrency, and featured a thread pool from the first release. Despite these attractions for engineers, performance was not considered a strong point.

Although each new release improved various aspects of the framework, .NET was not associated with good performance until the introduction of .NET Core (now simply “.NET”) more than ten years later. With .NET Core, performance improved dramatically and continues to improve with each release. Today, .NET offers extensive functionality and language features that enable the creation of high-performance applications. Only the most performance-sensitive systems require C, C++, or assembly to compete, and even then, achieving the highest performance in those environments requires highly skilled and experienced engineers.

Today, the productivity benefits of working with a managed system, a vast library ecosystem, and advanced runtime and compiler performance capabilities make .NET an obvious choice for numerous applications across various industries. However, engineers must demand more from themselves, take advantage of the platform's many underused features, and refocus on performance, as we did when working with native code-generating languages and tools.

The .NET Performance Dichotomy

As .NET has evolved, it has dramatically enhanced the ability to create high-performance code. Concurrently, languages like C# have introduced numerous “syntactic sugar” niceties—coding shortcuts that simplify development tasks. However, these conveniences often come with a performance cost. Newer developers naturally gravitate towards these language features for ease of use, while even seasoned developers increasingly rely on them.

This trend poses a significant challenge: while the .NET ecosystem continues to offer advanced tools and techniques to enhance code performance, engineers are becoming less adept at utilizing these methods or recognizing patterns that negatively impact performance. The balance between leveraging syntactic shortcuts and maintaining optimal performance is delicate and requires a deep understanding of the language and the underlying runtime.

For instance, features like anonymous methods, LINQ collection manipulation, iterators, closures, and even async/await patterns simplify code but often introduce overhead when not used judiciously. Engineers must be vigilant and ensure they are not sacrificing efficiency for convenience, undermining the potential of .NET’s performance capabilities.

Engineering teams must balance adopting modern language features and prioritizing performance optimization. Continuous education and training on best practices, performance profiling, and code reviews help bridge this gap. By fostering a culture of performance awareness and skill enhancement, teams fully leverage the power of .NET and C# to deliver high-performing applications that meet the demands of today’s competitive market.

Call to Action

As software engineers, we constantly strive for innovation, pushing the boundaries of what technology can achieve. Yet, amidst this relentless pursuit, we must not lose sight of one of our core responsibilities: ensuring our applications run efficiently and deliver optimal performance. This responsibility is more critical today than ever as the demand for responsive and resource-efficient software intensifies.

To rise to this challenge, we must adopt a proactive approach that emphasizes performance from the outset. Here are some key actions software engineers need to take to refocus on high performance and computing resource efficiency:

- Educate and Train Continuously: Invest in regular training sessions and workshops that cover the best practices for performance optimization, including profiling, benchmarking, and code reviews. Stay updated with the latest advancements in .NET and other relevant technologies to leverage their full potential.

- Profile Early and Often: Utilize performance profiling tools to analyze your code’s execution. Identify bottlenecks and areas for improvement before they become critical issues. Implement performance benchmarks as part of your development and testing processes.

- Review and Refactor Code: Conduct thorough code reviews to ensure that performance considerations are factored in. Encourage a culture of constructive feedback that prioritizes efficiency and resource management.

- Optimize Algorithms and Data Structures: Select the most suitable algorithms and data structures for your specific application. Understand their complexity and impact on performance. Make informed decisions to enhance efficiency.

- Adopt Efficient Coding Practices: Be mindful of the performance implications of language features and coding shortcuts. Use them judiciously and avoid over-reliance on syntactic sugar that introduces overhead.

- Leverage Advanced .NET Features: Harness the power of .NET’s advanced features, such as asynchronous programming, parallel processing, value types, stack allocations, Span<T> usage, memory pools, and efficient memory management. Ensure you understand their inner workings to use them effectively.

- Monitor and Measure Resource Utilization: Monitor your application’s resource usage, including memory, CPU, and I/O operations. Implement metrics and logging to gain insights into performance trends and make data-driven decisions.

- Foster a Performance-Aware Culture: Promote a team-wide culture that values performance optimization. Encourage collaboration and knowledge sharing to raise the bar for high-performance software development collectively.

By taking these steps, we ensure that our software meets and exceeds users’ expectations. It’s time to embrace the challenge and re-prioritize performance as a fundamental aspect of our development process.

Summary

To optimize software performance, we must adopt a holistic approach that considers all perspectives—from broad architectural strategies to fine-grained resource management. By doing so, we ensure that our systems are functional, efficient, reliable, and scalable. Raise the bar and deliver the performance our customers deserve.

References

Non-Functional Requirements: https://kzdev.net/crucial-role-of-non-functional-requirements-in-software-quality/

Technical Debt: https://kzdev.net/technical-debt-charges-interest/

Microservices: https://en.wikipedia.org/wiki/Microservices

CQRS: https://learn.microsoft.com/en-us/azure/architecture/patterns/cqrs

Event Sourcing: https://learn.microsoft.com/en-us/azure/architecture/patterns/event-sourcing

Data Partitioning: https://learn.microsoft.com/en-us/azure/architecture/best-practices/data-partitioning

Eventual Consistency: https://en.wikipedia.org/wiki/Eventual_consistency

Optimistic Concurrency: https://en.wikipedia.org/wiki/Optimistic_concurrency_control

Distributed Caching: https://www.geeksforgeeks.org/what-is-a-distributed-cache/

Algorithm Complexity: https://www.geeksforgeeks.org/complete-guide-on-complexity-analysis/

Context Switches: https://learn.microsoft.com/en-us/windows/win32/procthread/context-switches

Vertical and Horizontal Scaling: https://www.geeksforgeeks.org/system-design-horizontal-and-vertical-scaling/