Understanding and Emphasizing the Often-Overlooked Aspects of Software Engineering

In my previous posts, I discussed the Circle of Influence and showed how your commitment to quality impacts the product, the customer, the company, and your personal and professional well-being. Beyond professional pride in doing a great job, the quality you and your team commit to determines your work environment and level of job satisfaction.

A largely overlooked aspect of software quality is the impact of non-functional requirements (NFRs). This post and the next discuss how paying close attention to these NFRs significantly enhances software products’ quality, performance, and longevity while keeping teams agile and responsive over the long run. Conversely, neglecting them leads to numerous challenges for customers and engineering teams.

What Are Non-Functional Requirements?

In the ever-evolving world of software development, the focus on meeting specific requirements and acceptance criteria often overshadows the equally critical non-functional requirements. While functional requirements define what the software should do, non-functional requirements outline how the software should perform under various conditions and the general quality of the source code and other related artifacts. Attention to these aspects dictates the ability of the artifacts to evolve quickly over time to handle minor and seismic shifts in the technology and requirements landscapes. NFRs are often appropriately referred to as quality attributes or software quality attributes.

Before delving deeper into the importance and implications of these non-functional requirements, it is valuable to briefly enumerate and introduce the typical NFRs discussed in software engineering. Understanding these key attributes helps you better appreciate their role in shaping a robust, high-quality software product.

Depending on the company and the individual teams, some of these NFRs are treated as explicit requirements or acceptance criteria, which is fantastic when they are. However, with many Line-of-Business (LOB) systems, these concepts are not strictly specified as requirements, and it is often up to the teams to determine the level of importance they will apply to them.

Here are some crucial NFRs that are often overlooked (this is a partial list of commonly known NFRs and is certainly not exhaustive):

- Performance: Ensuring the system meets performance criteria such as response time, throughput, and resource utilization.

- Scalability: The ability of the system to handle increased load by adding resources and applying appropriate design patterns.

- Security: Protecting the system from unauthorized access, data breaches, and other security threats.

- Supportability: How well the system is designed to provide customer support, quickly identify and resolve issues, and proactively identify potential problems.

- Reliability: Ensuring the system operates consistently and accurately over time.

- Maintainability: The ease with which the system can be updated, modified, and maintained; also impacts testability.

- Portability: The ability of the system to run on different platforms and environments.

- Compliance: Adhering to relevant laws, regulations, and standards.

- Interoperability: Ensuring the system can work with other systems and software.

- Availability: Ensuring the system is accessible and operational when needed.

- Tested and testable: Ensuring that the code is tested and written in a testable manner; closely related to maintainability.

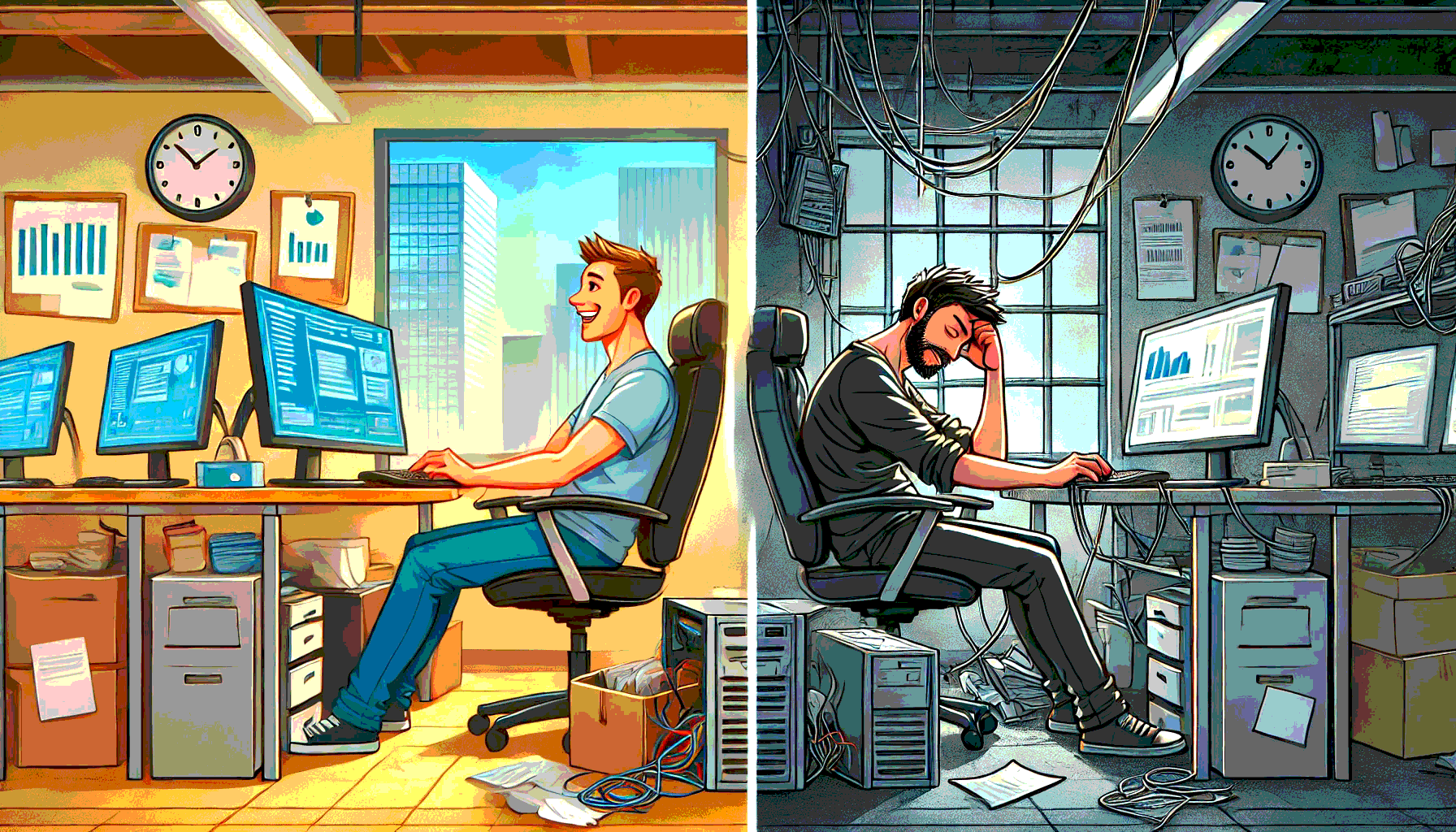

Teams that emphasize the importance of NFRs are more efficient and productive and produce better products. By prioritizing these crucial aspects, teams avoid common pitfalls and reduce the need for extensive rework and troubleshooting. This proactive approach leads to a more streamlined development process, higher quality software, and a more satisfied and motivated team.

When engineers see their efforts result in a stable, secure, and user-friendly product, they are more satisfied and feel pride and ownership in their work. Focusing on NFRs ensures that the final product meets functional requirements and excels in performance, reliability, and usability, creating a better overall outcome for the team and the end users.

As we delve deeper into software engineering, it will become increasingly evident that focusing on Non-Functional Requirements (NFRs) is paramount to achieving excellence. Many of my future blog posts will explore various strategies and methodologies to implement quality by prioritizing these NFRs. Each post will provide insights and practical approaches to ensure the software meets its functional objectives and excels in performance, security, maintainability, and reliability.

In the remainder of this post, I provide a foundational introduction to these critical NFRs and illustrate their roles in enhancing software quality. Understanding these elements is the first step towards creating robust, user-friendly, high-performing software products.

Performance

Performance is a critical aspect of software development, though it is often conflated with scalability. While both are closely related and directly impact one another, they are distinct concepts. Performance typically encompasses metrics such as response times and throughput, ensuring that the system operates efficiently under specified conditions and is responsive to users. However, it also includes resource utilization, such as CPU and memory usage, which is frequently overlooked, misunderstood, or not given proper attention.

On managed platforms like .NET, developers have become increasingly lax in paying sufficient attention to these aspects. In the early days of .NET Framework, there were limited options to manage resource utilization effectively. However, with modern .NET (formerly .NET Core), this is no longer the case. It offers extensive tools and techniques for optimizing resource management, so there is little excuse for being cavalier about resource utilization. Properly managing CPU and memory usage is crucial for maintaining high performance, particularly in applications expected to scale.
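To make the resource-utilization point concrete, here is a minimal sketch of measuring both elapsed time and peak memory for a piece of code. It is written in Python for brevity rather than .NET, and the helper `measure` and the two sample functions are hypothetical names used only for illustration.

```python
import time
import tracemalloc


def measure(func, *args):
    """Return (result, elapsed_seconds, peak_bytes) for one call of func."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = func(*args)
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, elapsed, peak


def sum_squares_list(n):
    # Materializes every intermediate value in a list before summing.
    return sum([i * i for i in range(n)])


def sum_squares_stream(n):
    # Streams values one at a time, so memory use stays roughly constant.
    return sum(i * i for i in range(n))


if __name__ == "__main__":
    for func in (sum_squares_list, sum_squares_stream):
        _, seconds, peak = measure(func, 200_000)
        print(f"{func.__name__}: {seconds:.4f}s, peak {peak / 1024:.0f} KiB")
```

Both functions return the same answer, but the streaming version should show a far smaller memory peak. Measuring before optimizing keeps the effort focused on code that actually consumes resources.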

With a Cloud-First mindset, resource utilization matters even more: it directly drives how much vertical and horizontal scaling an application needs, and therefore its cloud operating costs. The same holds for applications running on back-office and data-center servers.

Understanding and prioritizing performance and scalability is essential for developing robust, high-performing software. Engineers ensure that their applications meet current performance requirements and adapt to future demands by optimizing resource utilization and designing systems to scale effectively. This dual focus ultimately leads to a more efficient, reliable, and user-friendly product.

Scalability

Scalability refers to a system’s ability to handle predictable and unpredictable spikes in load and simultaneous requests without suffering performance degradation. Achieving scalability often involves partitioning systems and data, using asynchronous and eventual consistency patterns, optimizing communications and data accesses, and scaling out when multiple nodes are needed to handle the load.
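As one illustration of the partitioning idea, the sketch below assigns each key to a partition using a stable hash, so every node computes the same placement. It is a simplified example in Python; the function name `partition_for` and the key format are assumptions, and real systems often use consistent hashing so that changing the partition count moves fewer keys.

```python
import hashlib


def partition_for(key: str, partition_count: int) -> int:
    """Map a key to a partition index in the range [0, partition_count)."""
    if partition_count < 1:
        raise ValueError("partition_count must be at least 1")
    # SHA-256 gives the same result in every process and on every machine,
    # unlike Python's built-in hash(), which is randomized per process.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


if __name__ == "__main__":
    for customer in ("customer-1", "customer-2", "customer-3"):
        print(customer, "-> partition", partition_for(customer, 4))
```

Because placement depends only on the key, requests for the same customer always land on the same partition, which keeps related data together and spreads unrelated load across nodes.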

Vertical and horizontal scaling needs are directly driven by how well the system utilizes and manages resources on each node, as discussed in the Performance section above.

When the demand surpasses the capacity of a single node, scaling out becomes necessary. This involves adding more nodes to the system, distributing the load, and maintaining system performance. Effective scaling out ensures that the system remains responsive and reliable, even as the number of users or data volume grows.

Vertical scaling, on the other hand, focuses on enhancing the resources dedicated to each node. The system can handle more intensive workloads by increasing the node’s CPU, memory, or storage capacity. However, efficient resource utilization during the development phase helps reduce the size of each node, minimizing the need for extensive vertical scaling. This lowers the costs associated with high-powered nodes and reduces both the frequency of horizontal scale-out and the number of additional nodes required to manage the load.

Incorporating these scalability strategies into the development process ensures the system can adapt to current and future demands, providing a seamless and efficient user experience. By balancing vertical and horizontal scaling and optimizing resource utilization, engineers create robust, high-performing software capable of handling diverse and fluctuating workloads in a much more cost-effective manner.

Security

Security in software development extends far beyond implementing multi-factor authentication (MFA) and utilizing Transport Layer Security (TLS) in network communications. While these measures are fundamental, a genuinely secure system must address several other critical aspects to protect data and ensure robust security across the entire application lifecycle.

One key consideration is how data is stored in persistence. Encrypting data at rest is essential to protect sensitive information from unauthorized access, especially in the event of a data breach. This involves using strong encryption algorithms and regularly rotating encryption keys to maintain data security.

Another critical aspect that is often overlooked is the management of in-process memory. Properly clearing memory that held sensitive data reduces the risk of data leaks and unauthorized access. Engineers should employ secure coding practices to ensure that sensitive data is not inadvertently exposed in memory and that memory is correctly sanitized after use. In most cases, the .NET CLR manages memory for you, but it does not guarantee when sensitive values are actually overwritten, so it is essential to be diligent in handling those edge cases when needed.
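The idea of sanitizing memory after use can be sketched as follows. This is a simplified Python illustration, not a hardened implementation: the names `wipe` and `secret_buffer` are hypothetical, and the runtime may still hold other copies of the secret (for example, the immutable value passed in).

```python
from contextlib import contextmanager


def wipe(buffer: bytearray) -> None:
    """Overwrite every byte of a mutable buffer with zero, in place."""
    buffer[:] = bytes(len(buffer))


@contextmanager
def secret_buffer(secret: bytes):
    """Yield a mutable copy of the secret and zero it when the block exits."""
    buffer = bytearray(secret)
    try:
        yield buffer
    finally:
        # Runs even if the block raises, so the copy never outlives its use.
        wipe(buffer)


if __name__ == "__main__":
    with secret_buffer(b"api-key-123") as key:
        print("using a key of", len(key), "bytes")
    print("after use:", bytes(key))
```

The important design choice is holding the secret in a mutable buffer that can be overwritten deterministically, instead of waiting for garbage collection. In .NET, `CryptographicOperations.ZeroMemory` serves a similar purpose for byte spans.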

User permissions and access controls are also crucial components of a secure system. Implementing the principle of least privilege ensures that users only have access to the resources necessary for their roles, minimizing the risk of unauthorized actions. This is particularly important in multi-tenant applications, where isolating tenant data and enforcing strict access controls prevent data breaches and maintain the integrity of each tenant’s information.
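A minimal sketch of least privilege combined with tenant isolation might look like the following Python example. The `User` type, the permission strings, and `can_access` are all assumptions made for illustration; a production system would typically delegate this to its identity and authorization framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    tenant_id: str
    permissions: frozenset = frozenset()


def can_access(user: User, resource_tenant_id: str, required_permission: str) -> bool:
    """Deny by default: allow only same-tenant access with an explicit grant."""
    if user.tenant_id != resource_tenant_id:
        return False  # Tenant isolation is checked before anything else.
    return required_permission in user.permissions


if __name__ == "__main__":
    clerk = User("ann", "tenant-a", frozenset({"invoice:read"}))
    print(can_access(clerk, "tenant-a", "invoice:read"))   # own tenant, granted
    print(can_access(clerk, "tenant-a", "invoice:write"))  # not granted
    print(can_access(clerk, "tenant-b", "invoice:read"))   # other tenant
```

Starting from "deny" and requiring an explicit grant means a forgotten rule results in a refused request, not an accidental exposure.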

Supportability

Supportability is a fundamental aspect of system design, ensuring the system can be maintained and supported efficiently over its lifecycle. Implementing robust observability practices is key to achieving this, as it enables quick identification and resolution of issues within the system. Incorporating comprehensive logs, tracing, and metrics into the codebase provides continuous insights into the system’s behavior, facilitating proactive monitoring and troubleshooting.

Logs are crucial in recording system events, errors, and other significant occurrences, which can be analyzed to diagnose and resolve issues swiftly. Tracing helps track the flow of requests and interactions across different system components, allowing engineers to pinpoint the root cause of performance bottlenecks or failures. Metrics offer quantitative data on various aspects of system performance, making it easier to identify trends, anomalies, and areas requiring optimization.

Careful implementation of observability within the codebase ensures that different areas and sections of code can be easily correlated with corresponding information in the observability archives. This correlation simplifies locating and addressing issues, reducing downtime and enhancing the system’s reliability.
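One common way to make log entries easy to correlate is to stamp every entry written during a request with the same identifier. The sketch below shows this in Python using the standard `logging` and `contextvars` modules; the logger name, message text, and the helper names `build_logger` and `handle_request` are hypothetical.

```python
import contextvars
import logging
import uuid

# Holds the identifier of the request currently being processed.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationFilter(logging.Filter):
    """Attach the current correlation ID to every log record."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


def build_logger(name, handler):
    handler.addFilter(CorrelationFilter())
    handler.setFormatter(
        logging.Formatter("%(levelname)s [%(correlation_id)s] %(name)s: %(message)s")
    )
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False
    return logger


def handle_request(logger, order_id):
    """Process one request; every entry it logs shares one correlation ID."""
    token = correlation_id.set(uuid.uuid4().hex)
    try:
        logger.info("order received: %s", order_id)
        logger.info("order stored: %s", order_id)
        return correlation_id.get()
    finally:
        correlation_id.reset(token)


if __name__ == "__main__":
    handle_request(build_logger("orders", logging.StreamHandler()), "A-100")
```

With the identifier present on every line, a support engineer can filter the log store for one request and see its full path through the code.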

Moreover, well-integrated observability provides valuable insights into areas causing performance or scalability concerns. Engineers can analyze logs, traces, and metrics to identify inefficiencies, optimize resource usage, and improve overall system performance. This, in turn, supports the scalability strategies discussed earlier, ensuring the system remains responsive and cost-effective.

Solid and reliable customer support is another critical component of supportability. With effective observability, support teams can access detailed diagnostic information to assist users promptly and accurately. This enhances user satisfaction and builds trust in the product, contributing to its long-term success.

Reliability

Reliability in software systems encompasses several key aspects, including the ability to function correctly under different conditions, maintain performance over time, and recover gracefully from failures. Engineers must implement best practices to achieve high reliability and pay diligent attention to detail throughout the software development lifecycle.

Best practices such as comprehensive testing, data and input validation and verification, robust error handling, and fallback patterns are vital in enhancing software reliability. Thorough testing ensures the software is free from critical bugs and performs well across different scenarios. Data and input validation and verification involve checking that input data meets predefined criteria and is error-free, ensuring that only valid and expected input is processed. Robust error handling ensures the system can gracefully manage and recover from errors, providing informative feedback to users and maintaining operational stability. Fallback patterns offer alternative methods for system functionality when primary methods fail, enhancing resilience and maintaining a seamless user experience.
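Two of these practices, input validation and a fallback pattern, can be sketched in a few lines. The Python example below is illustrative only: the quantity limits, the function names, and the choice of which exceptions trigger the fallback are assumptions.

```python
def parse_quantity(raw, minimum=1, maximum=1000):
    """Validate untrusted input and return it as an int, or raise ValueError."""
    try:
        value = int(raw)
    except (TypeError, ValueError):
        raise ValueError(f"quantity must be a whole number, got {raw!r}") from None
    if not minimum <= value <= maximum:
        raise ValueError(f"quantity must be between {minimum} and {maximum}")
    return value


def with_fallback(primary, fallback, handled=(ConnectionError, TimeoutError)):
    """Call primary(); if it fails with an expected error, use fallback()."""
    try:
        return primary()
    except handled:
        return fallback()


if __name__ == "__main__":
    def live_price():
        raise TimeoutError("pricing service did not respond")

    def cached_price():
        return 9.99

    print(parse_quantity("3"))
    print(with_fallback(live_price, cached_price))
```

Only the listed exception types trigger the fallback, so unexpected errors such as programming bugs still surface and are not silently masked.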

By adhering to these practices, development teams create robust systems that withstand various operational challenges. High reliability not only boosts customer confidence and satisfaction but also reduces the frequency and severity of incidents. This, in turn, eases the burden on support and engineering teams, allowing them to focus on innovation and continuous improvement rather than firefighting issues.

A highly reliable system fosters a positive user experience, encouraging customer loyalty and trust. For engineering teams, it means fewer distractions from unplanned work and more time to enhance system capabilities. Reliability is a cornerstone of a successful software system, benefiting customers and the teams building and supporting it.

Maintainability

Maintainability is pivotal for the longevity and success of any software system. It refers to the ease with which a system can be modified to correct faults, improve performance, or adapt to a changed environment. Following established best practices and development principles significantly enhances maintainability. For example, the fundamental principles of SOLID, KISS, DRY, and YAGNI keep code more maintainable.

In addition to these principles, keeping cognitive and cyclomatic complexity in code at low levels is crucial for maintainability. Cognitive complexity refers to the mental effort required to understand the code, while cyclomatic complexity measures the number of linearly independent paths through the code. Lower complexity levels make the system easier to comprehend, test, and modify. Reducing complexity also decreases the likelihood of introducing errors and enhances the overall quality of the software.
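To show what cyclomatic complexity counts, the sketch below approximates it for a piece of Python source by adding one for each decision point. It is a rough illustration and not a replacement for a real analyzer: the function name and the set of node types counted are simplifying assumptions.

```python
import ast

# Node types treated as decision points in this simplified count.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def approx_cyclomatic_complexity(source: str) -> int:
    """Return 1 plus the number of decision points found in the source."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra paths, one per extra operand.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def shipping(weight, express, member):
    if weight > 10:
        if express:
            return 30
        return 20
    if express and member:
        return 10
    return 5
'''

if __name__ == "__main__":
    print(approx_cyclomatic_complexity(SAMPLE))
```

The sample function has three `if` statements and one `and`, so the approximation yields 5. Each of those paths needs its own test case, which is why lower numbers make code easier to test and to reason about.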

A consistent code structure, with diligent and concise use of comments, is also vital for maintainability and helps keep cognitive complexity low. The principles of Clean Code are also very valuable in creating and evolving maintainable code. Highly maintainable code also tends to be far more testable.

Teams should establish a common, agreed-upon coding standard for code structure and the employment of all the concepts listed here and any others that apply to specific business domains, engineering teams, and companies. Consistency in applying and adhering to the principles is as important as their value alone.

Portability

Portability is the ability of software to be transferred from one environment to another with minimal modification. Creating highly portable code involves adhering to specific practices and principles, such as avoiding or isolating platform-specific features, using standard libraries and APIs, and abstracting hardware and operating system dependencies.
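Isolating platform-specific details behind a single function is one way to apply these practices. The Python sketch below resolves a per-user configuration directory using each platform's usual convention; the function name `user_config_dir` and its parameters are hypothetical, and the parameters exist mainly so the behavior can be tested without changing the real environment.

```python
import os
import sys
from pathlib import Path


def user_config_dir(app_name, platform=None, env=None, home=None) -> Path:
    """Return the conventional per-user config directory for app_name."""
    platform = platform or sys.platform
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)
    if platform.startswith("win"):
        base = Path(env.get("APPDATA", home / "AppData" / "Roaming"))
    elif platform == "darwin":
        base = home / "Library" / "Application Support"
    else:
        # Linux and other Unix-like systems: honor XDG_CONFIG_HOME if set.
        base = Path(env.get("XDG_CONFIG_HOME", home / ".config"))
    return base / app_name


if __name__ == "__main__":
    print(user_config_dir("demo-app"))
```

The rest of the codebase calls `user_config_dir` and never inspects the operating system itself, so supporting a new platform means changing one function.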

Creating portable code has many benefits. It allows software to be deployed across various environments, including different operating systems, hardware configurations, and network topologies. Moreover, portability simplifies maintenance and upgrades, as changes can be implemented once and propagated across all supported environments, reducing the effort and cost of managing multiple codebases.

Additionally, portable code enhances software longevity, making it easier to adapt to emerging technologies and platforms. This adaptability ensures that the software remains relevant and functional over time, protecting the investment in its development. For engineering teams, portability reduces the complexity and risk associated with platform migrations, enabling a smoother transition and quicker adaptation to new environments.

Portable code also simplifies moving to the cloud and deploying across diverse cloud platforms and services.

Compliance

Compliance refers to adhering to laws, regulations, standards, and ethical practices that govern the usage and deployment of software systems. Even when not explicitly stated in business requirements, ensuring compliance is essential, as it helps prevent legal issues, fosters user trust, and protects against security breaches.

Legal and Regulatory Obligations: Software systems often operate within a framework of laws and regulations that dictate specific standards and practices. Failure to comply with these can lead to legal repercussions, substantial fines, and loss of reputation. For instance, data protection laws such as the General Data Protection Regulation (GDPR) in the European Union mandate strict guidelines on how personal data must be handled and protected. Ignoring such regulations can expose organizations to significant legal risks.

User Trust and Confidence: Compliance is crucial in fostering user trust and confidence in a software product. Users are more likely to engage with and rely on software that they perceive as secure and ethical. By adhering to compliance standards, engineers demonstrate their commitment to safeguarding user data and ensuring a reliable user experience, enhancing user satisfaction and loyalty.

Prevention of Security Breaches: Non-compliance with security standards can lead to vulnerabilities that malicious actors can exploit. Meeting compliance requirements helps establish robust security measures that protect the software from threats and breaches. This proactive stance minimizes the risk of data breaches, cyber-attacks, and other security incidents that could compromise the integrity of the software and the data it handles.

Compliance and security NFRs become challenging to integrate into the system when they aren’t considered early and in every aspect of development. Retrofitting these NFRs late in the product development cycle requires extensive refactoring, hurts productivity, and almost always yields a fragile result that is susceptible to costly errors.

Interoperability

Interoperability is a key non-functional requirement (NFR) that ensures that different software systems and components work together seamlessly. Achieving interoperability involves adhering to standard data formats and communication protocols to facilitate smooth system interactions. This practice simplifies integration efforts and enhances the software product’s flexibility and scalability.

Utilizing standard data formats such as JSON or XML, as well as widely accepted communication protocols like HTTP/HTTPS, gRPC, or WebSockets, aids in establishing a consistent and reliable exchange of information. Robust error handling and data translation layers are equally important. These layers act as a buffer, insulating the core business logic from the intricate details of external systems and data persistence constraints. By managing errors gracefully and translating data effectively, these layers ensure the system remains resilient and adaptable, even in the face of unexpected changes or disruptions in the external environment.
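A translation layer of this kind can be quite small. The Python sketch below converts a partner system's JSON into an internal type and turns every parsing problem into one well-defined error. The field names (`custId`, `first`, `last`), the `Customer` type, and `TranslationError` are invented for illustration.

```python
import json
from dataclasses import dataclass


class TranslationError(ValueError):
    """Raised when an external payload cannot be mapped to the internal model."""


@dataclass(frozen=True)
class Customer:
    customer_id: int
    full_name: str


def customer_from_partner_json(payload: str) -> Customer:
    """Translate the partner's JSON shape into the internal Customer type."""
    try:
        data = json.loads(payload)
        return Customer(
            customer_id=int(data["custId"]),
            full_name=f'{data["first"]} {data["last"]}'.strip(),
        )
    except (KeyError, TypeError, ValueError) as exc:
        # json.JSONDecodeError is a ValueError, so malformed JSON lands here too.
        raise TranslationError(f"unusable partner payload: {exc!r}") from exc


if __name__ == "__main__":
    print(customer_from_partner_json('{"custId": "17", "first": "Ada", "last": "Lovelace"}'))
```

Business logic only ever sees `Customer` and `TranslationError`, so a change in the partner's format is absorbed in this one function.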

Availability

Availability ensures a software system is accessible and operational whenever users need it. Highly available systems are designed to minimize downtime and maximize uptime, thereby providing a reliable and consistent user experience.

From the customer’s perspective, highly available systems are perceived as trustworthy and dependable. When users can consistently access a service without interruptions, their confidence in the product and the business behind it increases significantly. This unwavering accessibility fosters customer loyalty and enhances the business’s reputation, as customers associate the brand with reliability and excellence.

For engineering teams, highly available systems translate to less time spent on firefighting and emergency troubleshooting to keep the system running. Instead, engineers focus on productive tasks like feature development, performance optimization, and innovation. This shift not only improves the overall quality and functionality of the software but also boosts team morale and efficiency, as engineers channel their skills and creativity into building a better product rather than constantly managing system failures.

Fallbacks, replication, redundancy, and functional partitioning are a few of the techniques used to keep systems highly available.
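As a small illustration of how replication supports availability, the sketch below reads from a list of replicas in order and returns the first successful answer. It is written in Python with hypothetical names; the replicas are plain functions standing in for real data-store clients.

```python
def read_with_failover(replicas, key):
    """Try each (name, read_function) pair in order; return the first success."""
    failures = []
    for name, read in replicas:
        try:
            return name, read(key)
        except ConnectionError as exc:
            # Remember why this replica failed, then move on to the next one.
            failures.append(f"{name}: {exc}")
    raise ConnectionError("all replicas failed: " + "; ".join(failures))


if __name__ == "__main__":
    def primary(key):
        raise ConnectionError("primary unreachable")

    def replica(key):
        return {"sku-1": 7}[key]

    print(read_with_failover([("primary", primary), ("replica-1", replica)], "sku-1"))
```

The caller gets an answer as long as any one replica is reachable, and the collected failure messages preserve the diagnostic detail needed when every replica is down.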

Testability

Developing highly testable code, where automated tests are quickly written and different scenarios are adequately tested, is essential for increasing code confidence and boosting productivity. This approach allows engineers to verify that components work as expected in isolation, minimizing bugs and errors. Early identification and resolution of issues lead to a more stable and reliable product.

Automated testing upholds high-quality standards without slowing development. Frequent and consistent execution of these tests provides immediate feedback, ensuring new changes don’t introduce regressions. Consequently, engineers innovate and iterate rapidly, backed by a solid test foundation.

Maintaining well-structured, modular, and easily understandable code simplifies test creation and debugging, significantly contributing to testability. Clean code also allows engineers to isolate and address issues quickly, enhancing testing efficiency.

Adopting established design patterns and best practices, such as dependency injection and separation of concerns, promotes testability by decoupling components and reducing dependencies. These practices facilitate mocking or stubbing the system parts during testing, focusing on specific code behaviors.
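Dependency injection is easiest to appreciate with a dependency that is hard to control, such as the system clock. In the Python sketch below, the service receives its clock as a constructor argument, so a test can substitute a fixed time. `GreetingService` and its parameter names are hypothetical.

```python
from datetime import datetime, timezone


def utc_now():
    return datetime.now(timezone.utc)


class GreetingService:
    """Builds a greeting based on the current hour from an injected clock."""

    def __init__(self, now=utc_now):
        self._now = now  # Injected dependency; defaults to the real clock.

    def greeting(self, name: str) -> str:
        hour = self._now().hour
        if hour < 12:
            part = "morning"
        elif hour < 18:
            part = "afternoon"
        else:
            part = "evening"
        return f"Good {part}, {name}"


if __name__ == "__main__":
    # Production code uses the default clock.
    print(GreetingService().greeting("Ada"))

    # A test injects a stub clock to make the result deterministic.
    def fixed():
        return datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)

    print(GreetingService(now=fixed).greeting("Ada"))
```

Because the class never reaches for the real clock directly, all three branches can be tested in isolation without waiting for a particular time of day.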

Prioritizing testability by incorporating maintainability and following best practices enhances code confidence, productivity, and the overall robustness of the software. Automated tests for new code also become immediate regression tests for future changes, ensuring ongoing system reliability.

Complementary and Conflicting Requirements

Many non-functional requirements (NFRs) complement and reinforce each other. For instance, enhancing performance often goes hand in hand with improving scalability, as optimized code and efficient resource management enable a system to handle increased loads easily. Similarly, testability and maintainability are intrinsically linked; well-structured, modular code is more straightforward to test thoroughly and simpler to maintain over time. Engineers create robust systems that excel in multiple dimensions by adopting best practices that promote these qualities.

However, sometimes NFRs present conflicting priorities that require careful balancing. For example, in C#, features that support writing simple but expressive code, such as LINQ, often come at some cost in performance. Conversely, writing highly performant C# code often requires less common constructs, increasing cognitive complexity and negatively impacting maintainability. This apparent conflict between performance and maintainability exemplifies where engineering becomes both art and science.

Highly skilled and experienced engineers can adeptly navigate these conflicts, finding a balance that maximizes performance without sacrificing maintainability. By leveraging their expertise, they implement solutions that mitigate the downsides of each approach. Furthermore, training other engineers reduces the cognitive complexity associated with advanced techniques, ensuring that the codebase remains accessible and maintainable for the entire team.

Coming Up Next: The Quality Impact of NFRs

This post briefly addressed well-known and essential non-functional requirements and how they impact your products. Highly available systems boost customer confidence and loyalty and free engineering teams from constant firefighting, allowing them to invest their time in meaningful development activities. Testability is crucial for maintaining exceptional code quality and productivity, ensuring new changes do not introduce regressions and fostering a robust and maintainable codebase.

In the upcoming post, I will delve into the dual impacts of NFRs: the positive outcomes of prioritizing these quality aspects and the significant negative consequences of neglecting them. I will examine how focusing on NFRs enhances user experience, safeguards customer trust, and ensures software products’ long-term success and resilience.

References

Non-Functional Requirements: https://en.wikipedia.org/wiki/Non-functional_requirement

Circle of Influence: https://kzdev.net/software-engineering-circle-of-influence

Cloud First: https://kzdev.net/cloud-first

SOLID Principles: https://www.freecodecamp.org/news/solid-design-principles-in-software-development

KISS Principle: https://www.geeksforgeeks.org/kiss-principle-in-software-development

DRY Principle: https://www.geeksforgeeks.org/dont-repeat-yourselfdry-in-software-development

YAGNI Principle: https://www.geeksforgeeks.org/what-is-yagni-principle-you-arent-gonna-need-it

Clean Code: https://www.freecodecamp.org/news/how-to-write-clean-code

Clean Code: A Handbook of Agile Software Craftsmanship (book): https://www.amazon.com/Clean-Code-Handbook-Software-Craftsmanship/dp/0132350882